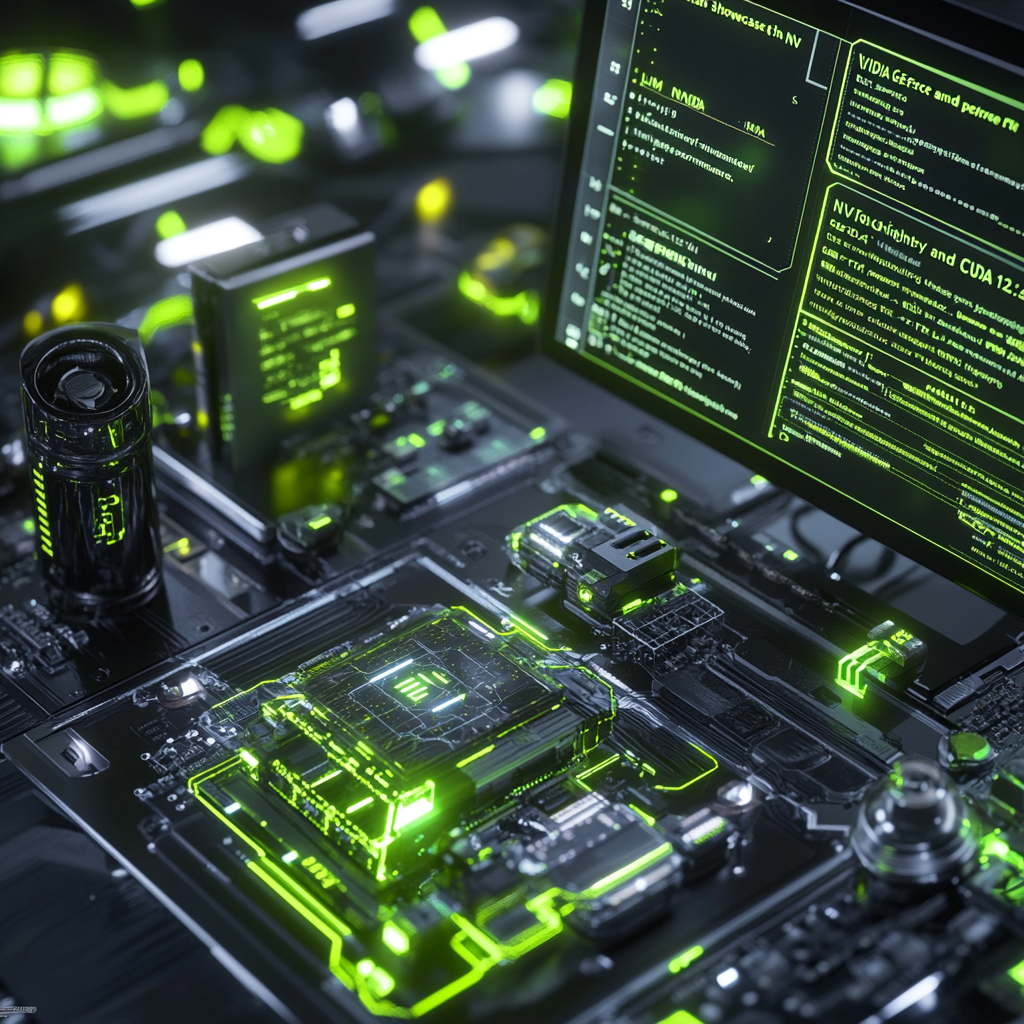

Boosting LLM Efficiency with NVIDIA GeForce RTX and CUDA 12.8 in LM Studio

Alright, my curious companions, fasten your seatbelts: we're about to embark on a breathtaking journey into the corner of AI where LM Studio meets the gloriously powerful NVIDIA GeForce RTX. We're chasing performance gains that until recently lived only in the dreams of tech aficionados and coding wizards. Imagine, if you will, effortlessly running cutting-edge large language models (LLMs) right on your desktop, with fast responses and smooth, delightful AI interactions. This isn't some far-flung fantasy: it's here, and it's astonishingly accessible.

Let's dive into LM Studio itself. The shining star of this tale is its GPU offloading feature. Think of it as a superhero cape for computers with modest GPUs: it splits a model between your GPU and CPU, so you decide how many of the model's layers live in video memory (VRAM) on the GPU while the CPU handles the rest. Your GPU doesn't have to be top of the line, and the whole model doesn't have to fit in VRAM, for you to get a substantial speedup over running on the CPU alone. Let's be honest: how many of us want to fork out our life savings for the latest hardware? With LM Studio, you can run substantial models like Gemma 2 27B, which demands a whopping 19 GB of VRAM to run entirely on the GPU, on far more modest cards, thanks to this offloading wizardry.
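To make the idea of splitting a model concrete, here is a minimal back-of-the-envelope sketch of how many layers might fit on a GPU. It is not LM Studio's actual heuristic: it assumes every layer is the same size and that a fixed, hypothetical `reserve_gb` of VRAM is held back for the KV cache, activations and the CUDA context. The 19 GB figure comes from the text above; the 46-layer count is an illustrative assumption.

```python
import math


def layers_on_gpu(model_size_gb: float, n_layers: int, vram_gb: float,
                  reserve_gb: float = 1.5) -> int:
    """Estimate how many transformer layers fit in VRAM.

    Illustrative assumptions (not LM Studio's real logic):
    - all layers are the same size, so each takes model_size_gb / n_layers;
    - reserve_gb of VRAM is kept free for the KV cache, activations,
      and the CUDA context.
    """
    per_layer_gb = model_size_gb / n_layers
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    # Round down: a partially fitting layer would overflow VRAM.
    return min(n_layers, math.floor(usable_gb / per_layer_gb))


# Hypothetical 19 GB model with 46 layers on a few common VRAM sizes.
for vram in (8, 12, 16, 24):
    k = layers_on_gpu(19.0, 46, vram)
    print(f"{vram:>2} GB VRAM: {k}/46 layers on GPU, {46 - k} on CPU")
```

Under these assumptions an 8 GB card would hold about a third of the layers (15 of 46), while a 24 GB card holds all of them. The result is the kind of layer count a GPU-offload setting asks for; rounding down keeps the estimate conservative.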

Now, you might wonder: what makes this combination so powerful? Cue the drumroll for the mighty CUDA 12.8, the key that unlocks superior performance on NVIDIA GeForce RTX GPUs. Updating to CUDA 12.8 lets LM Studio embrace the entire spectrum of RTX AI PCs, from slender laptops to muscle-flexing high-performance desktops. The result? Expect faster model loading, smoother inference, and response times that could rival a cheetah on a caffeine high! The LM Studio 0.3.15 update even flings open the doors to the newly minted RTX 50-series GPUs, bringing advanced LLMs to the eager masses.

But can we quantify this enhanced experience? Certainly! Consider a quantized 7B model, which typically fits in around 4 to 7 GB of VRAM, the kind you'd find in many a laptop. On a GeForce RTX 4080 it runs at a staggering 78 tokens per second, while an Intel Core i7-14700K CPU manages just 9.8 tokens per second: roughly an eightfold difference. It's not merely about raw computational muscle; it's about turning the AI interaction into a seamless, rewarding experience that makes your creative brain tingle with delight.
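Where do those numbers come from, and what do they mean in practice? The sketch below uses two rules of thumb: weight memory is roughly parameters × bits per weight ÷ 8, and reply time is tokens ÷ tokens per second. Real quantization formats use slightly more than 4 bits per weight, and the KV cache and runtime add overhead on top, so treat the sizes as lower bounds. The 500-token reply length is a hypothetical example, and the timing ignores prompt processing.

```python
def weights_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def seconds_for_reply(n_tokens: int, tokens_per_second: float) -> float:
    """Time to generate n_tokens at a steady decode rate (ignores prompt processing)."""
    return n_tokens / tokens_per_second


# Weights of a 7B model at 4-bit and 8-bit quantization.
print(f"7B @ 4-bit: {weights_gb(7e9, 4):.1f} GB, 7B @ 8-bit: {weights_gb(7e9, 8):.1f} GB")
# prints "7B @ 4-bit: 3.5 GB, 7B @ 8-bit: 7.0 GB"

GPU_TPS = 78.0      # GeForce RTX 4080, figure quoted in the text
CPU_TPS = 9.8       # Intel Core i7-14700K, figure quoted in the text
REPLY_TOKENS = 500  # hypothetical reply length

gpu_s = seconds_for_reply(REPLY_TOKENS, GPU_TPS)
cpu_s = seconds_for_reply(REPLY_TOKENS, CPU_TPS)
print(f"GPU: {gpu_s:.1f} s, CPU: {cpu_s:.1f} s, speedup: {GPU_TPS / CPU_TPS:.1f}x")
# prints "GPU: 6.4 s, CPU: 51.0 s, speedup: 8.0x"
```

About 3.5 GB of 4-bit weights plus overhead lands near the low end of the 4 to 7 GB range, and 8-bit weights land near the top. A reply that takes under seven seconds on the RTX 4080 would take close to a minute on the CPU.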

Amid this whirlwind of performance, let's not forget privacy. LM Studio runs models on your own machine, so your data stays close to home. Unlike cloud services, which send your prompts off to remote servers, LM Studio keeps your conversations local, letting you enjoy all the perks of AI acceleration without worrying about someone peeking at your personal musings. Plus, it's a gift from the tech gods: it's free for personal use, and it doesn't play favorites, supporting both NVIDIA and AMD GPUs. Flexibility is the name of the game.

Now, let's talk about stepping into this world of AI wizardry. LM Studio is crafted with user-friendliness in mind. It's not a labyrinth of complexity; it's a stroll in the park, making it delightful for anyone eager to delve into running AI models locally. Whether you're summoning the powers of Windows, macOS, or Linux, a single click starts your local inference server. Say goodbye to fussing with convoluted prompt formatting; LM Studio presents a slick interface that feels like a friendly guiding hand. Are you a curious novice dipping your toes into the vast ocean of AI? This tool is your surfboard.
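Once the local server is running, other programs on your machine can talk to it over HTTP. LM Studio's server offers an OpenAI-compatible API, by default at `http://localhost:1234/v1` (check the address shown in the app). Here is a minimal standard-library sketch of a chat request. The model name `local-model` is a placeholder assumption; replace it with the identifier LM Studio shows for your loaded model. The `temperature` value is an arbitrary example.

```python
import json
import urllib.request

# Default LM Studio server address (assumption: verify it in the app).
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt: str, model: str = "local-model") -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the local server.

    "local-model" is a placeholder; use the model identifier shown in LM Studio.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,  # arbitrary example value
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "local-model", timeout: float = 60.0) -> str:
    """Send the prompt to the local server and return the assistant's reply text."""
    req = build_chat_request(prompt, model)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a model loaded and the server started, `print(ask("Explain GPU offloading in one sentence."))` sends the prompt to `localhost` only, so nothing leaves your machine.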

To experience LM Studio's awe-inspiring capabilities in full bloom, make sure you have a capable GPU, such as a GeForce RTX card, and an NVIDIA driver recent enough to support CUDA 12.8. Then head over to the LM Studio website and download it today. Discover a realm where your AI workflows flourish effortlessly right at home, and say goodbye to sluggish processing.
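How do you know whether your driver is recent enough? The header printed by `nvidia-smi` includes a `CUDA Version:` field showing the highest CUDA version the installed driver supports. The sketch below parses that field and compares it with 12.8. It assumes that a driver reporting 12.8 or newer is what LM Studio's CUDA 12.8 runtime needs; NVIDIA's compatibility rules can be more permissive, so treat this as a conservative check. The function names are hypothetical.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple

# Assumption: a driver reporting CUDA 12.8+ is sufficient for a CUDA 12.8 runtime.
REQUIRED_CUDA = (12, 8)


def max_cuda_from_smi(smi_output: str) -> Optional[Tuple[int, int]]:
    """Extract (major, minor) from the 'CUDA Version: X.Y' field of nvidia-smi output."""
    m = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", smi_output)
    return (int(m.group(1)), int(m.group(2))) if m else None


def supports(found: Optional[Tuple[int, int]],
             required: Tuple[int, int] = REQUIRED_CUDA) -> bool:
    """True if the driver's reported CUDA version meets the requirement."""
    return found is not None and found >= required  # tuple comparison: major, then minor


def check_local_driver() -> bool:
    """Run nvidia-smi (if installed) and check the reported CUDA version."""
    if shutil.which("nvidia-smi") is None:
        return False  # no NVIDIA driver tools on PATH
    try:
        out = subprocess.run(["nvidia-smi"], capture_output=True,
                             text=True, timeout=10, check=False).stdout
    except (OSError, subprocess.SubprocessError):
        return False
    return supports(max_cuda_from_smi(out))
```

Calling `check_local_driver()` returns `True` when the driver reports CUDA 12.8 or later; if it returns `False`, updating the NVIDIA driver is the first thing to try.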

As we survey the possibilities that LM Studio and NVIDIA GeForce RTX open up, we can't help but feel excited about the future of AI. It's a thrilling time for developers, researchers, and curious minds alike. So why not embrace these technological marvels? Explore the enchanting world of large language models, and let them lead you to unexpected places.

Before we part ways, let’s take a moment to reflect. The rapid evolution of technology can feel overwhelming, but staying informed is your best weapon against the tides of confusion. So, if you wish to bask in the comforting glow of knowledge about the latest advancements in AI, don’t hesitate! Subscribe to our Telegram channel at @ethicadvizor and keep your finger on the pulse of emerging tech news. Join us on this exhilarating journey into the future, where innovation and inspiration collide, and let’s explore together!