Like humans, ChatGPT favors examples and ‘memories,’ not rules, to generate language

ChatGPT and the Art of Language Generation: A Closer Look

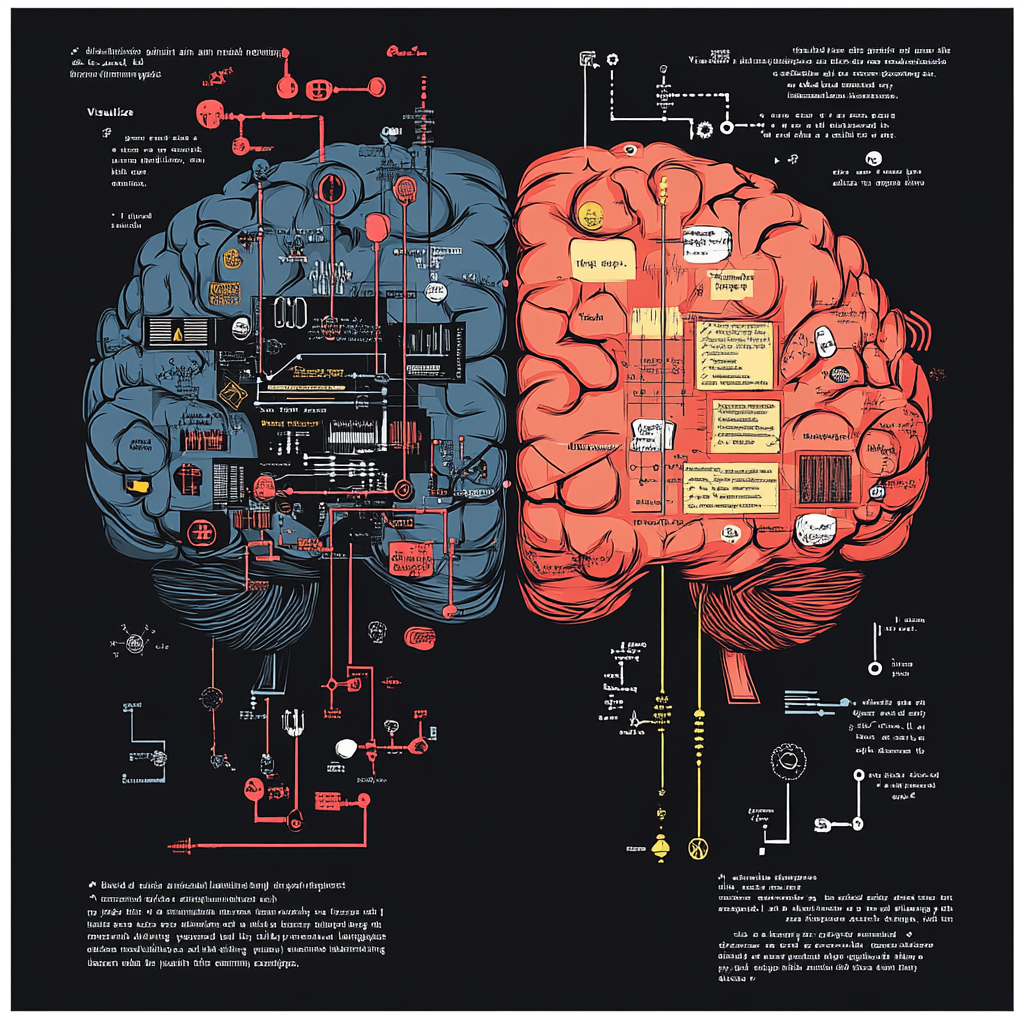

Let’s dive into how large language models (LLMs) like ChatGPT craft their linguistic magic. A team of researchers from the University of Oxford and the Allen Institute for AI has published a study that changes how we think about AI’s word-wizardry, and guess what? The finding mirrors how we humans whip up language. Rather than marching to the beat of rigid rules, models like ChatGPT lean on something akin to memory and examples: think of it as a form of analogical reasoning. So, let’s shed some light on this interplay of language creation between humans and machines.

A Glimpse at the Research

The study was recently published in the Proceedings of the National Academy of Sciences. The researchers took a good look at GPT-J, an open-source language model that shares some serious similarities with ChatGPT, particularly in how it handles English word formation. They dug into one corner of it: turning adjectives into nouns with suffixes like -ness (as in “happy” to “happiness”) or -ity (“available” becomes “availability”).

But here’s where the fun began: to really test the waters, the researchers tossed 200 totally made-up adjectives into the ring, whimsical creations like “cormasive” and “friquish.” GPT-J was challenged to turn these linguistic oddities into nouns by picking a suffix. Then, to make things spicy, the researchers compared the model’s choices with human responses and with the predictions of two cognitive models: one that strictly applied linguistic rules and another that worked by analogy to stored examples.
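To make the contrast between a rule-follower and an analogy-maker concrete, here’s a toy Python sketch. It is not the cognitive models from the study: the mini-lexicon, the hand-written rule, and the function names (`rule_based`, `analogy_based`, `shared_ending_len`) are all assumptions invented for illustration.

```python
from collections import Counter

# Hypothetical mini-lexicon: real adjectives mapped to the suffix their noun takes.
LEXICON = {
    "happy": "ness",
    "sad": "ness",
    "selfish": "ness",
    "foolish": "ness",
    "available": "ity",
    "readable": "ity",
    "sensitive": "ity",
    "active": "ity",
}


def rule_based(adjective: str) -> str:
    """Apply one fixed, hand-written rule keyed on the word ending."""
    if adjective.endswith(("ive", "able")):
        return "ity"
    return "ness"


def shared_ending_len(a: str, b: str) -> int:
    """Count how many trailing characters two words have in common."""
    count = 0
    for x, y in zip(reversed(a), reversed(b)):
        if x != y:
            break
        count += 1
    return count


def analogy_based(adjective: str, k: int = 3) -> str:
    """Let the k most similar stored words vote on the suffix."""
    neighbours = sorted(
        LEXICON,
        key=lambda word: shared_ending_len(adjective, word),
        reverse=True,
    )[:k]
    votes = Counter(LEXICON[word] for word in neighbours)
    return votes.most_common(1)[0][0]


for nonce in ("cormasive", "friquish"):
    print(nonce, "rule:", rule_based(nonce), "analogy:", analogy_based(nonce))
```

The rule version never looks at any stored word; the analogy version has no rule at all and simply copies what its nearest look-alikes do. On easy cases like these two, both approaches can land on the same suffix, which is why made-up words with less obvious endings are the interesting test.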

Breaking Down the Key Findings: Forget the Rules, Embrace the Examples

- Memory Over Mechanics: Instead of consulting a rigid rulebook, GPT-J leans on what it has stored away in its metaphorical filing cabinet. It relies heavily on previously seen examples to make decisions, much like how we unconsciously pull from our own experiences.

- A Human Touch: The way GPT-J picks suffixes reflects the kind of analogical reasoning that humans also use. We both draw on the examples stored in our minds rather than muddling through convoluted rules and grammar guides.

- Processing Differences: Here’s the kicker: the paths we take diverge. While humans tap into a rich tapestry of semantic understanding and cultural contexts, these AI engines are pattern-matching machines, navigating through oceans of data to find statistical probabilities that fit the bill.

These findings suggest that the language these models produce doesn’t spring from some deep understanding of grammar. Instead, the models are seasoned performers, stitching together phrases and ideas from a treasure trove of memorized instances and gliding around the rules of language like pros on a ballroom floor.

The Battle of Human Language vs. AI Generation

When it comes to linguistic creativity, humans shine bright like diamonds. We’re often weaving new words and meanings, inspired by the social fabric and the nuances around us. Our interactions are seasoned with experiences and intentions, making our communication not only flexible but also profoundly rich and meaningful.

Conversely, LLMs like ChatGPT are sort of the linguistic equivalent of a karaoke night. They can belt out catchy tunes based on what they’ve learned, but there’s no soul in the performance. They churn out words and phrases that sound spot-on and relevant because they’ve gleaned them from patterns found in boatloads of text, but they lack the beautiful depth that characterizes human conversation.

What This All Means for AI Development and Linguistics

- AI Meets Cognitive Science: Understanding that LLMs engage in analogical thinking paves the way for fresh collaborations between linguistic theories and AI development. We’re moving into a new arena!

- Next-Level Language Models: This newfound knowledge could prove beneficial for refining LLMs. Imagine integrating the wisdom of grammatical rules with the flexibility of example-driven learning. Now that’s a recipe for success.

- Smart Prompt Engineering: Grasping this dynamic means users can craft prompts that play to the AI’s strengths. Offering well-chosen examples tends to produce outputs that resonate more closely with the intended voice or flavor.

A Practical Takeaway

If you’re dabbling with ChatGPT or any similar AI model, recognizing its penchant for examples can be your secret weapon. When composing your prompts, throw in specific, relevant examples! It’s like giving the model a cheat sheet that leads to far richer responses. Want a particular style or structure? Be direct! Add illustrative instances to guide your AI companion. The results can be quite remarkable.

For example, if you crave specific formatting or a distinct tone, sprinkle a few sample input-and-output pairs into your prompt. The AI tends to respond far more clearly when it can see exactly what vibe or structure you’re aiming for. Think of it as a collaborative dance, where the more context you provide, the more in sync you’ll be.
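Here’s a minimal sketch of what that looks like in practice: a small Python helper that assembles an example-laden (few-shot) prompt as plain text. The function name `build_few_shot_prompt`, the `Input:`/`Output:` labels, and the sample pairs are all assumptions chosen for illustration, not a format any particular model requires.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a prompt: instruction, worked examples, then the new input."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # Leave the final Output: empty so the model completes it.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    instruction="Turn each adjective into a noun.",
    examples=[("happy", "happiness"), ("available", "availability")],
    query="cormasive",
)
print(prompt)
```

You would paste the resulting text into the chat window or send it through whatever API you already use. The examples do the heavy lifting: they show the model the pattern instead of describing it.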

Wrapping It Up

This trailblazing research has opened our eyes to the way ChatGPT and its fellow LLMs dance through language generation—not with the rigid movements of rule-following, but through the fluidity of analogical reasoning and memorized examples. This nuanced understanding draws parallels to human cognitive strategies while shining a light on the differences in our methods. So, as we march forward into a world intertwined with AI language technologies, grasping these dynamics enriches our comprehension and empowers us to better use conversational agents.
