Vision-language models can’t handle queries with negation words, study shows

Vision-Language Models: The Battle of Negation

Alright, let’s dive into the wild world of Vision-Language Models (VLMs). These models are making waves in AI by analyzing images and tying them to meaning through text. Take a massive pile of photos and their accompanying captions, train on it, and you get a VLM: a model that encodes both images and text as vectors in a shared space, so a picture and its description land close together. There’s a wrinkle, though: negation. I’m talking about pesky little words like "no" and "not."

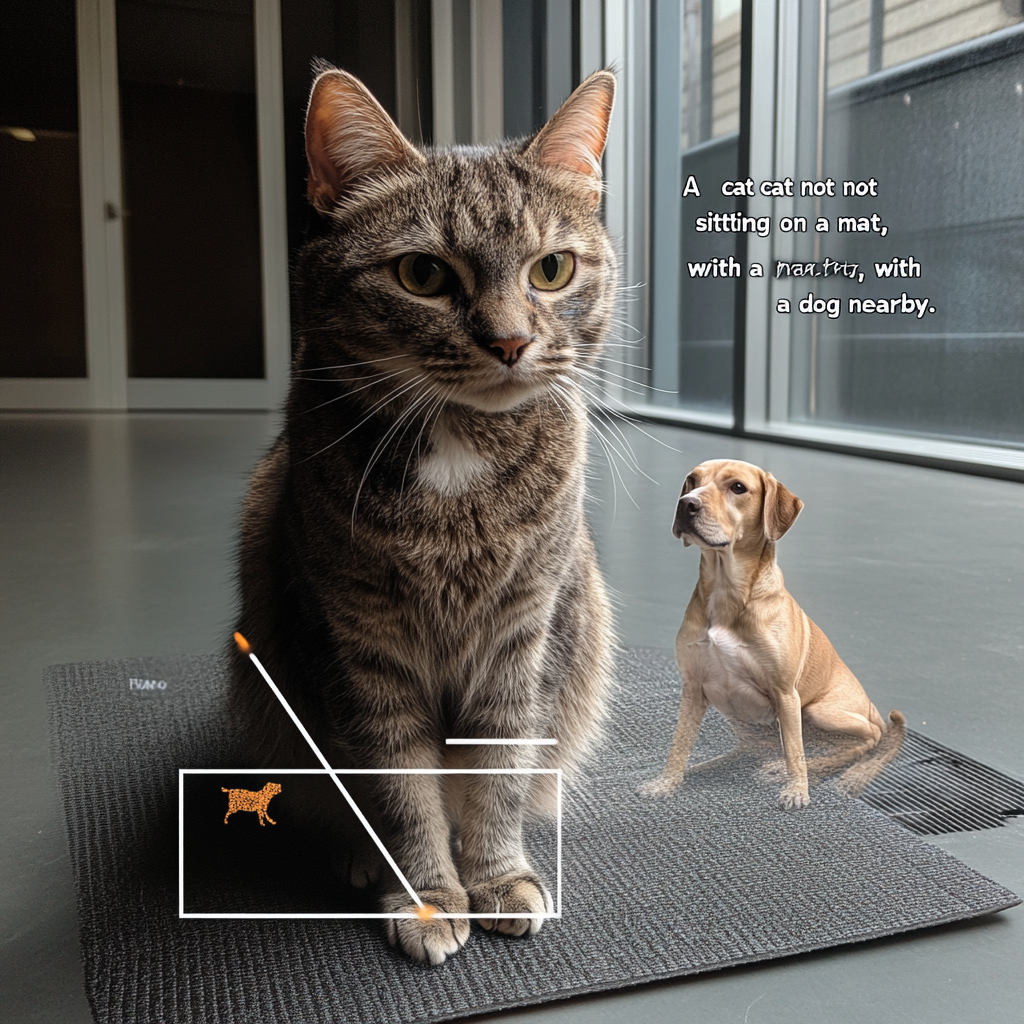

Now, if you think that’s just a small hiccup, think again. This is serious business, especially in contexts where missing the mark can lead to real-world consequences, like medical imaging or search engines. Picture this: you bark at a VLM to fetch pictures of a dog leaping over a fence without helicopters buzzing around. Seems straightforward, right? Well, good luck with that. The VLM might just sashay back with some images where helicopters are very much included. How’s that for frustrating?
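To make the helicopter mix-up concrete, here is a toy sketch of similarity-based retrieval. It is not how a real VLM works inside: the `embed` function is a made-up bag-of-words stand-in, the image "tags" replace real image embeddings, and the file names are hypothetical. The only point is to show what happens when an encoder effectively skips over the word "without."

```python
import math
from collections import Counter

# Words the toy encoder throws away. Note that negation words are in here,
# mimicking a model that never learned what they mean (an assumption).
IGNORED = {"a", "an", "the", "over", "with", "without", "no", "not"}

def embed(text):
    """Toy 'encoder': a bag of the remaining content words."""
    return Counter(w for w in text.lower().split() if w not in IGNORED)

def cosine(u, v):
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(u[k] * v[k] for k in u)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

# Hypothetical image collection, described by tags instead of pixels.
images = {
    "dog_fence.jpg": "dog jumping fence",
    "dog_fence_helicopter.jpg": "dog jumping fence helicopters",
}

def rank(query):
    """Return image names sorted from most to least similar to the query."""
    q = embed(query)
    return sorted(images, key=lambda name: cosine(q, embed(images[name])), reverse=True)

print(rank("a dog jumping over a fence without helicopters"))
```

In this toy setup the helicopter image comes back first, because once "without" is dropped, the query looks like it is asking for helicopters.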

The Roots of the Negation Problem

Why can’t these VLMs simply pick up on what we’re putting down? The problem lies in the training sets they feast on. The vast majority of image-caption pairs describe what is in the picture, not what is missing from it. A caption will say “a dog jumping over a fence”; it almost never says “a dog jumping over a fence without helicopters.” As a result, VLMs get very little practice telling affirmative statements from negated ones. Expecting them to spot the difference on their own is like teaching a goldfish to ride a bicycle: noble endeavor, poor execution.
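If you want to check how rare negation is in your own caption data, a rough count is easy to sketch. The word list and the sample captions below are assumptions picked for illustration, not anything taken from the study, and a simple keyword match will miss subtler forms of negation.

```python
import re

# A small, hand-picked set of negation cues (an assumption, not a standard list).
NEGATION_PATTERN = re.compile(r"\b(?:no|not|without|never)\b|n't\b", re.IGNORECASE)

def negation_rate(captions):
    """Fraction of captions that contain at least one negation cue."""
    if not captions:
        return 0.0
    hits = sum(1 for caption in captions if NEGATION_PATTERN.search(caption))
    return hits / len(captions)

# Hypothetical captions, made up for this example.
sample = [
    "a dog jumping over a fence",
    "two people riding bikes on a beach",
    "a street with no cars",
    "a bowl of fruit on a table",
]

print(negation_rate(sample))  # 0.25
```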

Tackling the Negation Conundrum

Enter the brains of the operation: researchers with a knack for tackling problems head-on. They’ve devised NegBench, a benchmark that tests how well VLMs grasp negation across different tasks. Think of it as an exam for these models. It has two main parts: Retrieval with Negation, where the model has to fetch images for a query that rules something out, and Multiple Choice Questions with Negated Captions, where it has to pick the caption that correctly describes an image. NegBench boasts a whopping 79,239 examples spanning images, videos, and medical data. That’s quite the exam for our dear VLMs.
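For a feel of what a multiple-choice test with negated captions might look like in code, here is a minimal sketch. The record layout (`image`, `options`, `answer`) and the `overlap_score` function are hypothetical and are not NegBench’s actual format or scoring; in practice you would plug in a real image-text similarity score.

```python
def mcq_accuracy(examples, score_fn):
    """Fraction of examples where the top-scoring caption is the correct one."""
    correct = 0
    for ex in examples:
        scores = [score_fn(ex["image"], option) for option in ex["options"]]
        predicted = scores.index(max(scores))  # ties go to the first option
        correct += predicted == ex["answer"]
    return correct / len(examples)

def overlap_score(image_tags, caption):
    # Hypothetical stand-in for a model: counts caption words that match
    # the image's tags and pays no attention to the word "no".
    return sum(1 for word in caption.lower().split() if word in image_tags)

# Made-up examples; each image is a set of tags rather than real pixels.
examples = [
    {
        "image": {"dog", "fence"},
        "options": [
            "a dog and a fence but no helicopter",
            "a dog and a helicopter but no fence",
            "a helicopter but no dog",
        ],
        "answer": 0,
    },
    {
        "image": {"dog", "helicopter"},
        "options": [
            "a dog and a fence but no helicopter",
            "a dog and a helicopter but no fence",
            "a fence but no dog",
        ],
        "answer": 1,
    },
]

print(mcq_accuracy(examples, overlap_score))  # 0.5
```

The negation-blind scorer gives the first two options the same score in both examples, so it only gets the first one right because ties happen to fall on the correct answer.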

As if building a benchmark weren’t enough, researchers have also been fine-tuning models such as CLIP on synthetic datasets where negation has a starring role. This method has shown promise: the fine-tuned VLMs performed better on queries involving negation, with improved recall and accuracy. Think of it as VLMs finally hitting the gym and working on that weak spot.
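The article doesn’t spell out how the synthetic negation data was built, so take the following as one simple way it could be done, not the researchers’ method: start from an ordinary caption, pick an object that is not in the image, and slot it into a negation template. The templates, function name, and object lists are all hypothetical.

```python
import random

# Hypothetical templates for turning a plain caption into a negated one.
TEMPLATES = [
    "{caption} without {obj}",
    "{caption}, with no {obj} in sight",
    "{caption} and no {obj}",
]

def add_negation(caption, present_objects, candidate_objects, rng):
    """Append a negated mention of an object that is absent from the image."""
    absent = [obj for obj in candidate_objects if obj not in present_objects]
    if not absent:
        return caption  # nothing safe to negate, leave the caption alone
    obj = rng.choice(absent)
    template = rng.choice(TEMPLATES)
    return template.format(caption=caption, obj=obj)

rng = random.Random(0)  # seeded so runs are repeatable
print(add_negation(
    "a dog jumping over a fence",
    present_objects={"dog", "fence"},
    candidate_objects=["dog", "helicopters", "cars"],
    rng=rng,
))
```

The one thing this sketch is careful about is only negating objects known to be absent, since a caption like “a dog without a dog” would teach the model exactly the wrong thing.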

Looking Ahead: The Future of VLMs

Now, before we pop the champagne over these developments, let’s keep our expectations in check. The fixes on the table aren’t without their quirks. The real challenge is overhauling how VLMs handle negation in the first place, which may call for architectural changes or more sophisticated training methods. Researchers are still working on it. In the meantime, it’s vital for users to understand these limitations and to test models on negated queries before putting them to work.

Conclusion

So, what have we learned? The inability of vision-language models to handle negation isn’t just a quirky detail. It’s a massive roadblock that affects how well they function and how reliably they deliver results. As artificial intelligence moves forward, solving the riddle of negation will pave the way for VLMs that can keep up with the complex questions we throw their way. The implications stretch across fields, from healthcare to content moderation.
